Why You Should Care About Keeping the Data Center Cool

Closer monitoring of various hardware components will have an incremental benefit when it comes to data center energy efficiency, but the biggest opportunities — and challenges — come with keeping the environment cool.

“Cooling is by far the biggest user of electrical power in the data center,” says Steve Carlini, global director of data center solution marketing at Schneider Electric, a vendor of data center power and cooling products. “That area may take up to 40 or 50 percent of all the power going into your data center.”

That explains why IT managers use a variety of techniques to ensure that expensive computing equipment does not overheat and that the resulting energy bills do not break the operations budget. The challenges grow as IT managers pack server racks more densely into the available real estate, creating hot spots that require specialized cooling strategies.

These techniques start with some simple, common-sense measures and extend to ambitious retrofit projects. Start with the low-hanging fruit of cooling management, advises Craig Watkins, product manager for racks and cooling systems at Tripp Lite, a vendor of power and cooling products.

First, install blanking panels in any open areas of server racks. “You can make a big impact by just blocking off those unused spaces, which will force your cold air through the equipment,” he says.

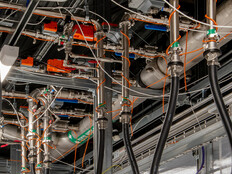

Another easy step is to use inexpensive cable managers to organize the mass of wires coming from the backs of servers. “If you have a rat’s nest of cables coming out of the server, the hot exhaust will hit that blockage and start backing up into your servers. You will not get the appropriate amount of CFM (cubic feet per minute of airflow) through the server,” he says.
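To see why blocked exhaust matters, a common HVAC rule of thumb ties together a server's heat load, its airflow and the temperature rise from intake to exhaust. The sketch below assumes standard air density near sea level (the 1.08 sensible-heat factor) and the 3.412 BTU/hr-per-watt conversion, and it plugs in a hypothetical 500-watt server; the function names are illustrative only.

```python
# Rule-of-thumb sensible-heat relationship for air near sea level:
#   BTU/hr = 1.08 * CFM * delta_T (degrees F), and 1 watt = 3.412 BTU/hr.
# Rearranged: CFM = 3.412 * watts / (1.08 * delta_T), roughly 3.16 * watts / delta_T.
BTU_PER_HR_PER_WATT = 3.412
SENSIBLE_HEAT_FACTOR = 1.08  # assumes standard air density


def required_cfm(heat_load_watts: float, delta_t_f: float) -> float:
    """Airflow (CFM) needed to carry away heat_load_watts with a delta_t_f rise."""
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    return heat_load_watts * BTU_PER_HR_PER_WATT / (SENSIBLE_HEAT_FACTOR * delta_t_f)


def exhaust_rise_f(heat_load_watts: float, cfm: float) -> float:
    """Intake-to-exhaust temperature rise (degrees F) for a given airflow."""
    if cfm <= 0:
        raise ValueError("cfm must be positive")
    return heat_load_watts * BTU_PER_HR_PER_WATT / (SENSIBLE_HEAT_FACTOR * cfm)


if __name__ == "__main__":
    # Hypothetical 500 W server designed for a 20 F rise.
    design_cfm = required_cfm(500, 20)
    print(f"design airflow: {design_cfm:.0f} CFM")
    # If tangled cables choke airflow to half the design value,
    # the same heat load produces twice the temperature rise.
    print(f"rise at half airflow: {exhaust_rise_f(500, design_cfm / 2):.0f} F")
```

Because the relationship is linear, every cut in airflow through the chassis shows up directly as hotter exhaust, which is the backup Watkins describes.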

For a bigger impact, IT administrators can employ spot cooling to keep the air temperatures around dense server racks within acceptable ranges. Spot cooling typically uses piping that carries refrigerant close to the racks. Fans ensure that cool air reaches the heat sources.

Modularity is another selling point for these systems. Districts can quickly reconfigure the refrigerant piping to accommodate new equipment or redirect cooling to racks that experience a spike in heat output due to heavy usage. This technique also mitigates some of the expense data centers incur to power traditional subfloor fans to push cool air throughout the facility. The new, smaller fans installed above the racks do not have to work as hard to circulate cold air, thereby saving power.

Next, IT managers should evaluate the effectiveness of cold-aisle containment, a strategy that uses enclosures to wall off racks and keep cold air close to the servers instead of letting it drift out into the larger data center.

“Cold-aisle containment increases the effectiveness and the performance of whatever cooling method you are using,” Carlini says. “You see a big efficiency gain.” He adds that, because cold-aisle containment systems are relatively inexpensive, their return on investment is quick — often less than a year.

But there are some disadvantages. “You are putting all the hot air into the room,” he notes. “So in some data centers, the air going into the servers is fine, but the ambient air in the rest of the room could be 100 or 120 degrees [Fahrenheit].”
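Carlini's point about the quick return on cold-aisle containment comes down to simple payback arithmetic: project cost divided by the savings it produces. The sketch below is a minimal illustration; the retrofit cost, the kilowatts of cooling load saved and the electricity rate are hypothetical placeholders, and it ignores maintenance, financing and rate changes.

```python
HOURS_PER_YEAR = 8760


def annual_savings(kw_saved: float, price_per_kwh: float) -> float:
    """Yearly savings from a steady reduction in cooling load, in currency units."""
    return kw_saved * HOURS_PER_YEAR * price_per_kwh


def simple_payback_months(project_cost: float, yearly_savings: float) -> float:
    """Months until cumulative savings equal the up-front project cost."""
    if yearly_savings <= 0:
        raise ValueError("yearly_savings must be positive")
    return project_cost * 12 / yearly_savings


if __name__ == "__main__":
    # Hypothetical inputs: a $12,000 containment retrofit that trims
    # 15 kW of continuous cooling load at $0.11 per kWh.
    savings = annual_savings(kw_saved=15, price_per_kwh=0.11)
    months = simple_payback_months(12_000, savings)
    print(f"annual savings: ${savings:,.0f}; payback: {months:.1f} months")
```

With these made-up figures the payback lands just under 12 months; plugging in a facility's own metered savings is what makes the estimate meaningful.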

Understanding the close relationship between power monitoring, power distribution and cooling technologies, some vendors are now offering packaged solutions that combine the necessary components for all three areas. Emerson’s SmartRow solution, for example, outfits data center racks with precision cooling, UPSs, power management, monitoring and control technologies within one enclosure.

Other Cooling Alternatives

On the flip side of cold-aisle containment is the use of enclosures to contain hot aisles.

“It is easier to add cold-aisle containment as a retrofit to an existing data center than hot-aisle containment,” Carlini says. “But hot-aisle containment is actually a more efficient way to control temperatures.”

These units use front-to-rear airflow: cold air comes in through a front opening, and hot air exhausts out the back into a contained hot aisle. Chimneys in the roof of the enclosure vent the heated air.

“You can tap into the data center’s HVAC system to help move that heat out of the room so you do not have to cool the room down to meat-locker temperatures,” Watkins says.

Other cooling methods include water-side economizers, which transfer server heat to water that then flows to an outdoor cooling tower, where cooler ambient conditions lower its temperature before the water recirculates to the data center. Air-side economizers work in a similar way, using cool outside air directly, and suit climates where the outside air is relatively cool much of the year.
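Whether an economizer pays off depends largely on how many hours a year outside conditions are cool enough to use it. A rough first pass is to count the hours in a year of hourly weather data that fall at or below a chosen outdoor-temperature limit. The sketch assumes a simple dry-bulb threshold, which is a simplification: real economizer controls also weigh humidity (air-side) or wet-bulb temperature (water-side). The threshold and sample readings are hypothetical.

```python
from typing import Iterable


def economizer_hours(hourly_temps_f: Iterable[float], max_outdoor_f: float) -> int:
    """Count hours whose outdoor dry-bulb temperature is at or below max_outdoor_f."""
    return sum(1 for temp in hourly_temps_f if temp <= max_outdoor_f)


def economizer_share(hourly_temps_f: list[float], max_outdoor_f: float) -> float:
    """Fraction of the sampled hours in which the economizer could carry the load."""
    if not hourly_temps_f:
        raise ValueError("need at least one hourly reading")
    return economizer_hours(hourly_temps_f, max_outdoor_f) / len(hourly_temps_f)


if __name__ == "__main__":
    # Hypothetical sample; a real study would use 8,760 hourly readings.
    sample = [38.0, 45.5, 52.0, 61.0, 67.5, 74.0, 81.0, 58.0]
    print(economizer_hours(sample, 60.0), economizer_share(sample, 60.0))
```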

The Continuity Connection

The new emphasis on detailed power and cooling monitoring and best practices has an impact on business-continuity planning. Even minor power problems can damage precision servers and workstations, leading to costly downtime and equipment repairs.

“Power and cooling redundancy must be factored into the design of any high-availability data center,” says Ron Mann, senior director of data center infrastructure at Hewlett-Packard. “Of course, redundancy and the tier level comes at a price, so it’s important to factor availability requirements into the design to ensure an optimum balance of cost and performance.”

The first line of defense for continuity is the power-filtering and battery-backup resources available from UPS units. But UPSs are designed for short-term disruptions.

Problems lasting more than about 15 minutes require a more extensive contingency plan. That may mean software that sets off a controlled shutdown sequence to power down equipment without risking data losses.
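A controlled shutdown can be planned backward from the UPS runtime: power down the least critical equipment first and make sure the whole sequence finishes before the batteries are exhausted. The sketch below is hypothetical throughout: the device names, priority numbers, shutdown durations, the 15-minute runtime and the two-minute safety margin are illustrative, and it assumes devices are shut down one at a time. In practice this logic would live in the shutdown software described above, configured through whatever options that software provides.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    priority: int            # hypothetical scale: lower = less critical, shut down first
    shutdown_minutes: float  # time needed for a clean, data-safe shutdown


def shutdown_order(devices: list[Device]) -> list[Device]:
    """Least critical devices go first so core systems stay up longest."""
    return sorted(devices, key=lambda d: d.priority)


def latest_start_minute(devices: list[Device], ups_runtime_minutes: float,
                        safety_margin_minutes: float = 2.0) -> float:
    """Minutes into an outage by which a one-at-a-time shutdown must begin."""
    total = sum(d.shutdown_minutes for d in devices)
    start = ups_runtime_minutes - safety_margin_minutes - total
    if start < 0:
        raise ValueError("UPS runtime is too short for a clean sequential shutdown")
    return start


def should_begin_shutdown(outage_minutes: float, devices: list[Device],
                          ups_runtime_minutes: float) -> bool:
    """True once the outage has lasted long enough that waiting longer is unsafe."""
    return outage_minutes >= latest_start_minute(devices, ups_runtime_minutes)


if __name__ == "__main__":
    fleet = [
        Device("database-server", priority=3, shutdown_minutes=4),
        Device("file-server", priority=2, shutdown_minutes=3),
        Device("test-vm-host", priority=1, shutdown_minutes=2),
    ]
    print([d.name for d in shutdown_order(fleet)])
    print(latest_start_minute(fleet, ups_runtime_minutes=15))
    print(should_begin_shutdown(5, fleet, ups_runtime_minutes=15))
```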

Another option is contracting for power from more than one utility company. That way, if one provider’s supply becomes scarce or it experiences a blackout, power will flow from the alternative source.

Backup generators are another important option for longer-term events. But experts say it is important to prioritize their use. In the past, IT managers used generators to keep the UPSs that support servers and other critical computing devices running. Today, many plans give cooling systems a higher priority.

“Use the generators to first lock in your cooling systems,” says Jack Pouchet, director of energy initiatives at Emerson Network Power, a power and cooling equipment company. “Once they are online, then move over to the UPSs.”

Rather than incurring the expense of this wide-scale approach, some districts are targeting their continuity strategies to specific areas of their data centers. This involves installing redundant power resources for the servers that run core enterprise applications and databases, which would cause long-term harm if they went offline for even a short period.
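Pouchet's advice to bring cooling onto the generators before the UPSs amounts to a priority-ordered load sequence. The sketch below assumes hypothetical load names, kilowatt ratings and generator capacity, and uses a simple greedy rule: step through loads in priority order and bring each one online only if the generator still has headroom. It is not a model of any particular generator or transfer-switch controller.

```python
from dataclasses import dataclass

# Hypothetical ranking that follows the advice above: cooling first, then UPSs.
PRIORITY = {"cooling": 0, "ups": 1, "other": 2}


@dataclass
class Load:
    name: str
    kind: str  # one of the PRIORITY keys
    kw: float


def generator_sequence(loads: list[Load],
                       generator_kw: float) -> tuple[list[Load], list[Load]]:
    """Return (loads brought online in order, loads deferred for lack of capacity).

    sorted() is stable, so loads of the same kind keep their listed order.
    A deferred load does not block smaller, lower-priority loads that still fit.
    """
    online: list[Load] = []
    deferred: list[Load] = []
    used_kw = 0.0
    for load in sorted(loads, key=lambda item: PRIORITY[item.kind]):
        if used_kw + load.kw <= generator_kw:
            online.append(load)
            used_kw += load.kw
        else:
            deferred.append(load)
    return online, deferred


if __name__ == "__main__":
    site = [
        Load("ups-a", "ups", 60),
        Load("office-lighting", "other", 15),
        Load("crac-1", "cooling", 40),
        Load("crac-2", "cooling", 40),
    ]
    online, deferred = generator_sequence(site, generator_kw=150)
    print("online:", [load.name for load in online])
    print("deferred:", [load.name for load in deferred])
```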

Unintended Consequences

Although a wide range of new technologies and best practices are available to squeeze ever-higher levels of efficiency out of data centers, IT managers should resist the urge to chase every last kilowatt of savings.

“Pendulums sometimes swing too far,” Pouchet says. “Energy efficiency is one thing, but you should not sacrifice reliability, ease of access or ease of upgrading as you work to improve your power infrastructure. I have seen some organizations find that, as a result of their energy policies, it took them three times longer to refresh their servers than before.”

For example, before commercial cold-aisle containment systems were readily available, some data centers built homegrown containment solutions. “They looked really good until the managers had to roll out a server rack, and they had to spend about two hours taking the containment enclosure apart,” he says. “No one thought through the process beforehand.”

Another data center ran into problems when it raised the ambient air temperature around its servers. The computers handled the warmer temperatures, but the expected savings evaporated when the next utility bill arrived.

“All of a sudden they saw their power bill go up,” Pouchet adds. The reason? In an act of self-preservation, each server ramped up its internal cooling fans, which increased the data center’s overall power draw.

“Data centers are their own ecosystems,” Pouchet says. “Little tweaks here and there may not seem like much, but at some point, if you go over a certain threshold, you may see unintended consequences that can be painful.”
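The utility-bill surprise Pouchet describes is consistent with the fan affinity laws, which say that a fan's power draw rises roughly with the cube of its speed. The sketch below applies that idealized cube law; the per-fan wattage, fan speeds and server count are hypothetical, and real server fans and firmware fan curves will not track the ideal law exactly.

```python
def fan_power_w(base_power_w: float, base_speed_pct: float, new_speed_pct: float) -> float:
    """Idealized fan affinity law: power scales with the cube of fan speed."""
    if base_speed_pct <= 0:
        raise ValueError("base_speed_pct must be positive")
    return base_power_w * (new_speed_pct / base_speed_pct) ** 3


def fleet_fan_increase_w(servers: int, base_power_w: float,
                         base_speed_pct: float, new_speed_pct: float) -> float:
    """Extra fan power across all servers after a fan-speed ramp."""
    per_server = fan_power_w(base_power_w, base_speed_pct, new_speed_pct) - base_power_w
    return servers * per_server


if __name__ == "__main__":
    # Hypothetical: 200 servers whose fans draw 10 W each at 40% speed
    # ramp to 60% after the room setpoint is raised.
    print(fan_power_w(10, 40, 60))  # 50% faster, about 3.4x the power
    print(fleet_fan_increase_w(200, 10, 40, 60))
```

In this hypothetical case, a 50 percent increase in fan speed more than triples fan power, which is how a modest setpoint change can cross the kind of threshold Pouchet warns about.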