6 Steps to Better Blade Management

Blades offer advantages over traditional rack-mounted servers, such as higher rack density and a smaller footprint. But it's important to focus on cooling, plan for more power and take care of networking issues, such as managing IP addresses and making sure the equipment has enough high-speed ports to handle the organization's bandwidth requirements. Here are six tips to keep in mind when deploying blades.

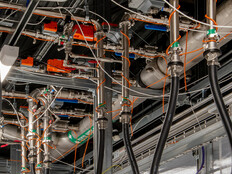

Tip 1: Focus on cooling.

Blade servers pack more CPUs into a smaller space, and a full rack can create more heat than a commercial oven. Six blade chassis per rack, with 14 blades in each chassis and four 150-watt CPUs in each blade, generate 50,400 W of heat (6 × 14 × 4 × 150 W), not counting the conversion losses from power supplies or heat generated by hard drives. With the potential to generate about 50 kilowatts per rack, plan for cooling sized to that load. Rack manufacturers and others offer cooling systems designed to deal with the heat produced by racks full of blade servers and other high-density server installations. These often segregate the data center into hot and cold aisles, or pull cold air in from the bottom of the rack and send it out the top as exhaust.
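The arithmetic above can be reproduced, and converted into units that cooling vendors commonly quote, with a short sketch. The chassis, blade and CPU figures come from the text. The conversion constants (about 3.412 BTU/hr per watt and 12,000 BTU/hr per ton of cooling) are standard values, and the function names are illustrative only.

```python
# Rack heat load from the figures in the text (CPU heat only;
# power-supply losses and hard drives are not included).
CHASSIS_PER_RACK = 6
BLADES_PER_CHASSIS = 14
CPUS_PER_BLADE = 4
WATTS_PER_CPU = 150

BTU_PER_HOUR_PER_WATT = 3.412   # standard conversion, rounded
BTU_PER_HOUR_PER_TON = 12_000   # one ton of refrigeration


def rack_heat_watts(chassis=CHASSIS_PER_RACK, blades=BLADES_PER_CHASSIS,
                    cpus=CPUS_PER_BLADE, watts=WATTS_PER_CPU):
    """Return the CPU heat load of one rack in watts."""
    return chassis * blades * cpus * watts


def cooling_tons(watts):
    """Convert a heat load in watts to tons of cooling."""
    return watts * BTU_PER_HOUR_PER_WATT / BTU_PER_HOUR_PER_TON


heat = rack_heat_watts()
print(f"{heat:,} W per rack")                        # 50,400 W per rack
print(f"{cooling_tons(heat):.1f} tons of cooling")   # 14.3 tons of cooling
```

Because the figure excludes power-supply losses and drives, treat the result as a lower bound when sizing cooling.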

Tip 2: Plan on additional power.

The heat that blades generate reflects the electrical power they draw, so plan on needing additional circuits, or even 220-volt circuits. Depending on the servers, you may need either multiple 110-volt power supplies or dual 220-volt power supplies.
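As a rough illustration of why 220-volt circuits help, the sketch below estimates how many circuits a rack would need. It rests on several assumptions that are not from the text: single-phase circuits, breakers loaded to 80 percent for continuous use, example breaker sizes of 30 A and 20 A, and no redundant feeds. The 50,400 W load is the CPU-only figure from Tip 1, so real requirements will be higher. Confirm actual sizing with an electrician and the local electrical code.

```python
import math


def circuits_needed(load_watts, volts, breaker_amps, continuous_derating=0.8):
    """Estimate the number of circuits needed to carry a load.

    Assumes single-phase power and that each breaker is loaded to
    only `continuous_derating` of its rating (an assumption here).
    """
    usable_watts = volts * breaker_amps * continuous_derating
    return math.ceil(load_watts / usable_watts)


RACK_LOAD_W = 50_400  # CPU-only figure from the cooling tip

print(circuits_needed(RACK_LOAD_W, volts=220, breaker_amps=30))  # 10
print(circuits_needed(RACK_LOAD_W, volts=110, breaker_amps=20))  # 29
```

Under these assumptions, the higher-voltage circuits cut the circuit count by roughly two-thirds.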

Tip 3: Find out the best way to access the blades.

In most cases, it's not possible to plug a keyboard, monitor and mouse into each blade. So accessing the blades, especially for BIOS configuration and initial OS installation, requires some additional planning. Some modern blade systems include keyboard, video, mouse (KVM) switch functionality, but others require connecting through a network management port that offers access (through either a dedicated application or a browser) to blades both pre- and post-boot. The upside to this is that even initial configuration can be managed remotely.

Tip 4: Take care of networking considerations.

Each blade will need at least one IP address, but generally there isn't an individual Gigabit Ethernet port on each blade. There is usually either a switch integrated into the chassis or, in some cases, a few higher-speed 10 Gigabit Ethernet ports that aggregate connections for all the blades. In either case, plan for one or more subnets, and use the chassis management application to assign addresses to each blade. Then set up routing and plan for enough bandwidth to each chassis so it can support the applications that will run on the blades.
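One way to plan the subnets before entering addresses into the chassis management application is to carve them out with Python's standard `ipaddress` module. This is a minimal sketch under assumed conventions: the `10.20.0.0/24` parent network, one /27 per chassis, and reserving the first two host addresses for a gateway and the chassis management port are all hypothetical choices, not requirements of any blade system.

```python
import ipaddress


def plan_chassis_addresses(parent_cidr, chassis_count, blades_per_chassis,
                           prefix=27):
    """Give each chassis its own subnet and list the blade addresses.

    Hypothetical layout: first host = gateway, second host = chassis
    management port, following hosts = blades.
    """
    parent = ipaddress.ip_network(parent_cidr)
    subnets = list(parent.subnets(new_prefix=prefix))
    if chassis_count > len(subnets):
        raise ValueError("parent network too small for the number of chassis")

    plan = {}
    for index in range(chassis_count):
        subnet = subnets[index]
        hosts = list(subnet.hosts())
        if blades_per_chassis + 2 > len(hosts):
            raise ValueError("subnet too small for the blades in a chassis")
        plan[f"chassis-{index + 1}"] = {
            "subnet": str(subnet),
            "gateway": str(hosts[0]),
            "management": str(hosts[1]),
            "blades": [str(h) for h in hosts[2:2 + blades_per_chassis]],
        }
    return plan


plan = plan_chassis_addresses("10.20.0.0/24", chassis_count=6,
                              blades_per_chassis=14)
for name, entry in plan.items():
    print(name, entry["subnet"], entry["blades"][0], "...", entry["blades"][-1])
```

A /27 leaves 30 usable addresses per chassis, which covers 14 blades plus the two reserved addresses with room to spare. A /28 would offer only 14 usable addresses and leave nothing for the gateway or management port.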

Tip 5: Automate the rollout of OSs and patches.

With as many as 14 blades per chassis, manually provisioning each blade, not to mention OS updates, application installations and patches, could take up all of a system administrator's valuable time. Plan on ways to automate rolling out OSs and patches. This might include using a management server such as System Center Configuration Manager from Microsoft, or simply scripts that roll out Linux OS images.
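Whatever tool performs the rollout, a script usually needs to decide which blades to touch at the same time. The sketch below shows one possible approach, patching in fixed-size batches so that part of each chassis stays in service. The blade names and the batch size of four are hypothetical, and the sketch only plans the order. It does not call any management product.

```python
def rolling_batches(blades, batch_size):
    """Split a list of blade names into consecutive batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [blades[i:i + batch_size]
            for i in range(0, len(blades), batch_size)]


# Hypothetical names for the 14 blades in one chassis.
blades = [f"chassis1-blade{n:02d}" for n in range(1, 15)]

for number, batch in enumerate(rolling_batches(blades, 4), start=1):
    print(f"batch {number}: {', '.join(batch)}")
```

With 14 blades and a batch size of four, this yields three batches of four and a final batch of two.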

Tip 6: Expect to deploy a storage area network.

Blades typically support one or two 2.5-inch drives or a shared storage array. The newest blade systems typically have a shared array that is connected to each blade via a high-speed interface such as Fibre Channel or InfiniBand, but others still use the individual drives attached to each blade. To expand past the capacity of the system in either case, plan for a storage area network. The SAN will typically be an iSCSI system; because the blades don't have expansion slots for the needed adapters, adding Fibre Channel or SCSI to each blade is generally not feasible. This means that you may need to plan for aggregating multiple Ethernet connections for storage, and possibly dedicate a second virtual Ethernet port per blade for storage to separate it from standard network traffic.
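To get a feel for how many Ethernet connections might need to be aggregated for iSCSI traffic, a back-of-the-envelope estimate can help. In the sketch below, the per-blade peak of 1 Gbps, the 10 Gbps uplink speed and the oversubscription ratios are illustrative assumptions. Measure real workloads before committing to a design.

```python
import math


def storage_uplinks_needed(blades, peak_gbps_per_blade, uplink_gbps=10,
                           oversubscription=1.0):
    """Estimate chassis uplinks needed for storage traffic.

    `oversubscription` is the ratio of total blade demand to provisioned
    uplink capacity that is considered acceptable (1.0 means none).
    """
    demand_gbps = blades * peak_gbps_per_blade / oversubscription
    return math.ceil(demand_gbps / uplink_gbps)


# 14 blades, each assumed to peak at 1 Gbps of storage traffic.
print(storage_uplinks_needed(14, 1.0))                        # 2
print(storage_uplinks_needed(14, 1.0, oversubscription=2.0))  # 1
```

The second call shows the trade-off: tolerating 2:1 oversubscription halves the uplink count, at the risk of contention when many blades hit storage at once.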