High-Performance Computing Drives Research on Campus

When you’re building high-speed infrastructure for academic research, the normal rules of enterprise IT may not apply. That’s a lesson the University of Florida learned the hard way.

When UF created its Research Shield network in 2015, the university treated it like a traditional IT project — and quickly discovered that the process took too long, cost too much and wasn’t nearly nimble enough, says Erik Deumens, UF’s director of research computing.

To meet the requirements of a $40 million federal research grant, UF did succeed in standing ResShield up in less than 90 days and meeting the security mandates of the Federal Information Management Security Act (FISMA). But getting there required multiple teams of IT pros. It took another six months to configure ResShield for the needs of researchers, says Deumens. Even then, the virtual machines weren’t compatible with all the applications researchers needed to use.

Unlike large-scale enterprise projects, which can take years to build and last for decades, research projects may last six months or less before the grant money runs out. Researchers need to get up and running quickly and, as UF discovered, the network may need to support obscure software with exacting requirements.

That’s why in March 2016 the university launched another FISMA-compliant high-performance infrastructure, Research Vault, which runs virtual desktops on a smaller version of the university’s HiPerGator supercomputer. ResVault’s VDI environments could be spun up more quickly and configured to run a wider range of Linux and Windows software than ResShield, Deumens says.

Universities Need High Power on Small Budgets

Universities hoping to deploy high-performance computing (HPC) infrastructure are often trapped in a Catch-22. They need extremely powerful computers with high-speed connections, but they’re usually constrained by limited budgets.

“Research networks that need to support applications like 3D imaging can be very bandwidth-intensive,” says Jim Duffy, senior analyst for 451 Research. “They require more storage than enterprise IT, as well as connectivity to other networks and universities.”

What’s more, this infrastructure often deals with sensitive data — medical research, intellectual property or corporate assets — that requires robust protection. For example, to compete for the billions of dollars that the federal government invests annually in research and development, institutions often must demonstrate compliance with FISMA and other standards. But they must also adhere to the culture of openness expected in academia. In addition, universities need HPC infrastructure to attract top talent, notes Deumens.

“Faculty researchers need state-of-the-art systems,” he says. “They come up with these weird ideas — we want that, because our faculty is hired to try and do things that nobody has ever done before — and we need to support them. So we don’t have six months to plan. And using restricted data makes it even harder.”

Research Networks Have Massive Storage Needs

The sheer amount of data that a research network must handle is another factor that sets them apart. Whether produced by satellites, environmental sensors, lab equipment or computational simulations, the volume is massive, says Peter Ruprecht, senior HPC analyst for the University of Colorado at Boulder.

“An individual satellite can generate tens of terabytes of data per month,” he says. “Student records and university email don’t come close to that.” Researchers may also be required to keep that data for years after a project concludes. That’s why CU-Boulder created the PetaLibrary, which currently holds up to 2 petabytes of data, or the equivalent of 120,000 high-definition movies.

The majority of the data is archived using an IBM TS3584 Tape Library System, while the rest lives on an IBM DCS3700 hard disk array. When researchers need to access archived data, it’s transferred from tape to hard disc. When they’re done, the data automatically returns to tape storage.

The PetaLibrary balances researchers’ need to securely archive older data with their desire for the most cost-effective storage solution, says Ruprecht. To offset costs, CU-Boulder charges research departments to use the PetaLibrary. Even so, it doesn’t store medical records or classified data, because there is insufficient demand to justify the expense of handling sensitive information, he adds.

Still, the university decided PetaLibrary was well worth the investment.

“When we started, we had a National Science Foundation grant that expired after three years,” Ruprecht says. “Once the university realized what an important service PetaLibrary provides, they decided to provide funding to keep it going indefinitely.”

Making the Network Work for Experimentation

In some cases, the network itself is a research project. Such is the case at Cornell University, where computer scientists have built a programmable network for experimentation purposes. The Cornell Open Science Network (COSciN) uses software-defined networking gear that can be programmed on the fly, which greatly simplifes network administration.

“Software-defined networking is an emerging technology that’s mostly found today in large data centers, but it’s likely to be used by all networks in the future,” says Nate Foster, an associate computer science professor who helped create COSciN.

COSciN connects the university’s data centers in Ithaca, N.Y., to its Weill Cornell Medical College in Manhattan, 231 miles south.

Transferring a massive, multi-terabyte file containing human genome data using a traditional network could take a week, Foster says. It was faster to simply load the data onto hard drives and ship them via inter-campus bus.

The SDN, however, automatically chooses the optimal path for data, cutting the transfer time from days to hours. The network can also automatically react to changes, like equipment failures or a new device coming online.

IT Provides Flexibility to Tinker

COSciN also provides a sandbox where researchers can try out new ideas — like the Frenetic family of network programming languages Foster is developing — without impacting the security or integrity of data on the university’s IT network.

“Our IT department has been great about finding ways to allow us to tinker without having some grad student taking down the whole Cornell network,” says Foster.

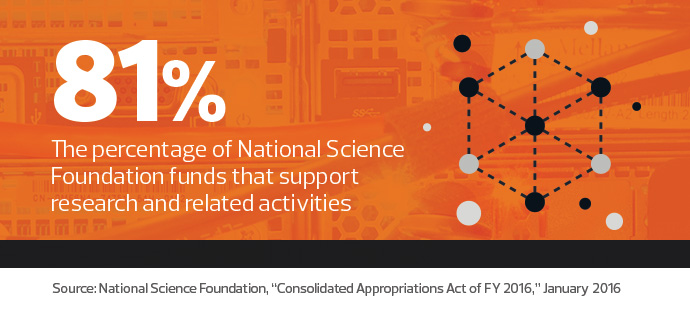

Of course, creating an intelligent, self-correcting network comes at a price. COSciN was developed with a $1 million grant from the National Science Foundation, using a special fund set aside for upgrading infrastructure for university research.

The question of funding remains the biggest difference between the enterprise and academia, says UF’s Deumens.

“Research happens in a different economy,” he says. “In the real world, you might see a feature you need and just pay for it. In the research world, that isn’t true. The only way research can stay competitive is that the price of computing has dropped so significantly that it can be done for the same amount of money, or even less.”