All Systems Go: Networking to Support Multicampus Colleges

Ivy Tech Community College of Indiana operates 32 campuses in more than a dozen regions around the Hoosier State. Until recently, the community college system also had 14 different networks.

“Each of the regions was set up like its own franchise,” says Ryan Blastick, executive director of statewide IT operations. “They had their own network; they had different hardware. The specifications and the age of the equipment were all over the board.”

The hodgepodge infrastructure created significant management hurdles. For one, disparate hardware meant that only certain IT workers were familiar with the idiosyncratic equipment and configurations in various regions, limiting flexibility around maintenance. The aging infrastructure often caused problems with performance, in some cases even preventing campuses from deploying new products. Each region was also on a separate wireless network, so users had to navigate separate login processes if they traveled between campuses.

Ivy Tech centralized IT services several years ago, and then moved to standardize the wireless platform statewide. But it was a massive systemwide network refresh, completed last summer, that truly brought the network together and set the stage for Ivy Tech to boost performance, increase network stability and support staff efficiencies.

Ivy Tech’s situation is not uncommon. Many college and university systems with multiple campuses have seen varied infrastructure take hold in separate geographical locations. Some institutions, such as Kennesaw State University in Georgia, have seen their network architectures become more complicated through mergers with other institutions. In both cases, IT administrators often find that standardization can relieve management headaches while improving network performance, reliability and visibility.

Nolan Greene, a senior research analyst in IDC’s Network Infrastructure group, says there has “definitely been a move toward standardization” among organizations with multiple locations. “It reduces the time spent on troubleshooting and keeping the lights on when you have a standardized, easily managed network infrastructure,” he says.

Taking Down Network Access Barriers

Prior to the network refresh, the majority of Ivy Tech campuses could provide only a 100-megabit-per-second connection to desktops. That wasn’t adequate for classes that used bandwidth-intensive applications such as engineering and drafting software. The backbone of the network was mostly limited to 1 gigabit per second, and the network lacked redundancy, with single points of failure putting availability at risk. The network also lacked sufficient Power over Ethernet (PoE) capability to support IT products that Ivy Tech wanted to deploy, such as additional wireless access points and a statewide, standardized Voice over IP phone system.

“There were issues in multiple campuses where the network was going down, whether that was related to power issues and not having the proper battery backups or uninterruptible power sources to supply the power, or if it was just that we had this old hardware that was failing,” Blastick says. “With the single points of failure within the regional networks, if a piece of hardware failed, depending on whether that region had sufficient spares, a campus could be down for as long as four hours while a replacement was brought onsite.”

During the network refresh, Ivy Tech deployed Cisco Systems hardware to beef up its backbone to 10Gbps (“something totally unheard of inside our campuses,” says Blastick) and to provide 1Gbps connections to desktops. It deployed Cisco Catalyst 2960 Series switches in closets and Cisco Catalyst 4500 Series switches at the network core, as well as Cisco 2921 Integrated Services Routers for its remote virtual private network sites.
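To put the desktop upgrade in perspective, the short Python sketch below estimates how long one file would take to cross a 100Mbps link versus a 1Gbps link. It is a back-of-the-envelope illustration only: the 500MB file size is a hypothetical stand-in for a drafting project, and the calculation assumes an ideal link with no protocol overhead or competing traffic.

```python
def transfer_seconds(file_size_mb: float, link_mbps: float) -> float:
    """Ideal transfer time, ignoring protocol overhead and contention."""
    bits = file_size_mb * 8 * 1_000_000  # megabytes to bits (decimal units)
    return bits / (link_mbps * 1_000_000)


FILE_MB = 500  # hypothetical file size, not a figure from Ivy Tech

old = transfer_seconds(FILE_MB, 100)   # pre-refresh desktop link
new = transfer_seconds(FILE_MB, 1000)  # post-refresh desktop link

print(f"100 Mbps: {old:.0f} s, 1 Gbps: {new:.0f} s")
```

Under these assumptions the same file moves in a tenth of the time, which is the kind of difference a classroom full of engineering workstations would notice.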

All told, Ivy Tech deployed well over 900 pieces of hardware across 100 physical locations, Blastick says.

In addition to boosting performance and reliability, the upgrades brought a level of consistency to Ivy Tech’s network that allowed it to better utilize staffers’ time. “Before, we relied heavily on local people because they knew how the regional network was built,” Blastick says. “Once we standardized, we could take down those regional barriers, and it didn’t matter which network person you called. They would be able to support everything remotely and understand how the network was designed. It simplified the support model.”

A Central Foundation for Tech Innovations

The University at Buffalo in upstate New York, which operates three campuses, found that a centralized IT function provided a strong base from which to carry out major technology initiatives. For example, the university recently overhauled its wireless network, bringing Gigabit Wi-Fi to students, faculty and staff with a combination of Aruba Networks 802.11ac Wave 1 and Wave 2 access points, along with Aruba AirWave for network management and Aruba ClearPass for policy management.

Ron Ternowski, manager of network engineering, says that having multiple campuses “complicated things from a logistics standpoint” during the deployment. For example, officials wanted to prioritize academic areas over social areas, and IT had to coordinate this prioritization across three sites. However, Ternowski says that centralization of IT, which occurred about a decade ago, has made overall network management much simpler. “Without centralization, you have to worry about interoperability between vendors, worry about routing protocols,” Ternowski says. “It would be a monumental task.”

Single-Vendor Solutions Streamline Maintenance

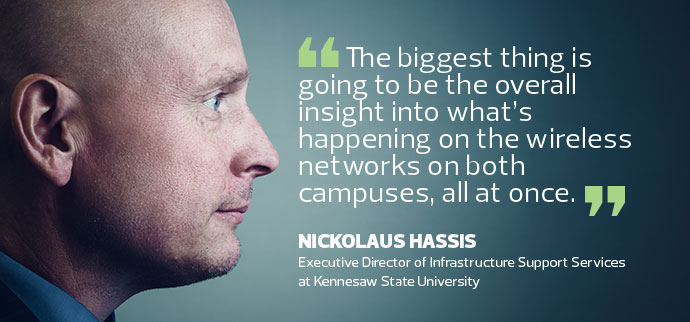

Kennesaw State University took a similar approach when it merged with Southern Polytechnic State University in early 2015. The two institutions’ IT departments had less than nine months to prepare before joint classes began the next fall.

Almost immediately, Kennesaw State’s IT team began working to connect the two campuses via two 10Gbps links on the state’s PeachNet System. That allowed the institutions to share local area network-based applications without using a virtual private network connection, and to authenticate wireless users on both campuses.

“That was so key to our success,” says Nickolaus Hassis, executive director of infrastructure support services.

The wireless infrastructure at the two campuses featured hardware from different vendors, but both Wi-Fi networks were reaching end of life, presenting an opportunity to implement a single, unified solution. A refresh with Cisco hardware is currently underway.

“It will be much, much easier when everything is on one vendor,” Hassis says. “The biggest thing is going to be the overall insight into what’s happening on the wireless networks on both campuses, all at once.”

The university refreshed the core switching at the Southern Polytechnic campus, installing Cisco equipment. It also installed new ports in Southern Polytechnic classrooms, where up to six workstations had been sharing a 1Gbps connection. Another priority was upgrading the data closets at Southern Polytechnic and replacing aging cable, steps necessary to ensure the network could handle critical services like VoIP. In some cases, data closets were sharing space with janitorial equipment. Many closets lacked power to fully support PoE, and some were overheating because they lacked stand-alone air conditioning units. The university upgraded power supplies, installed cooling and replaced any gear that had reached end of life.

“Until we did those upgrades, we weren’t confident that the voice solution would provide the sort of service that people expect,” Hassis says.
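The shared classroom connections mentioned above are easy to quantify. The Python sketch below divides a 1Gbps link among the workstations using it at the same moment. The even split is an assumption made for illustration; real traffic is bursty, and the function name is hypothetical.

```python
def per_station_mbps(link_mbps: float, active_stations: int) -> float:
    """Even share of a link when all active stations transmit at once."""
    if active_stations < 1:
        raise ValueError("active_stations must be at least 1")
    return link_mbps / active_stations


LINK_MBPS = 1000  # the shared 1Gbps classroom connection

# Assumed scenarios: one, three, or all six workstations busy at once.
for active in (1, 3, 6):
    share = per_station_mbps(LINK_MBPS, active)
    print(f"{active} active: about {share:.0f} Mbps each")
```

With all six workstations busy, each gets roughly a sixth of the link under this simplified model, which helps explain why additional classroom ports were part of the refresh.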

Designing Compatible Campuses

The University of New Hampshire has four primary campuses, including its law school in Concord, which became part of the university when UNH acquired the Franklin Pierce Law Center in 2010.

After the merger, getting the law school onto the same voice platform as the university’s main campus was a priority, says Dan Corbeil, UNH’s IT operations manager. The law school’s system was outdated, and university officials worried about it failing or being hacked.

The university also moved quickly to replace the law school’s aging data center infrastructure, deploying new Cisco hardware to make the facility compatible with the main campus.

“We’re a very efficient shop, and one of the reasons we’re so efficient is because we have standards,” says Corbeil. “It was really important for us to get to a centralized platform that was consistent.”