Step Inside University of Colorado Boulder’s Virtual Environment

Creating a virtual desktop environment is technically challenging, insanely elusive, and perilously expensive. So why even try? Because the benefits, once achieved, are extraordinarily profitable and dynamic.

How better to reduce support of the growing hundreds or thousands of staff computers needing to be fixed, replaced and serviced than to eliminate them altogether with a zero client that needs zero care and feeding? How much greater would your ROI be if costs were cut in half or two-thirds and R&R could extend twice as long? What if the speed in upgrading software applications depended on upgrading only one copy of the software? Or if your desktop could be accessed anywhere, anytime, on any device? What other environment can deliver all those dividends? Our virtual desktop infrastructure (VDI) has accomplished all of that and more.

Building a New Environment? Start from Scratch

For more than two years, the Housing and Dining Services department at the University of Colorado Boulder has benefited from the innovative work of a small IT staff who created and deployed a stable and successful VDI to the department's 600 employees. That's not to say we didn't experience a few misadventures along the way.

Other universities have inquired into the technical details of our final solution, and we're happy to share specifics on the storage element — the most critical component. While there are other solutions out there, success depends mostly on whether the VDI will be built from scratch or retrofitted to existing hardware. Adding software layers to correct or enhance existing hardware not designed for VDI adds complexity in troubleshooting through those layers, usually resulting in significant cost increases for licenses and increased risk of an unacceptable endpoint experience for users.

Define Success

When staff can't distinguish between using a zero client or a standard desktop computer, we consider that a success. As it turns out, VDI runs faster in our environment because applications process on Cisco's UCS blade servers and Nimble Storage arrays, not on a limited desktop computer. Additionally, the staff enjoy mobile access to their desktops now, a definitive (and compelling) factor in overall staff acceptance.

True mobility can come only from private cloud deployments. That mobility increases productivity among staff, who can access files and large critical business database systems securely from remote areas, mobile devices or even equipment that's otherwise past its prime.

Storage requirements for VDI are generally understood to be more IOPS-heavy than traditional storage workloads, and our experience as early adopters of VDI technology confirms that. Over the past five years, we have gained production VDI experience on several traditional and hybrid storage solutions. The long and winding road to VDI success has led us to a technology stack based on Nimble Storage, Cisco UCS compute, Cisco Nexus/ASA network and VMware ESXi/vSphere/Horizon View.

How It Works for Us

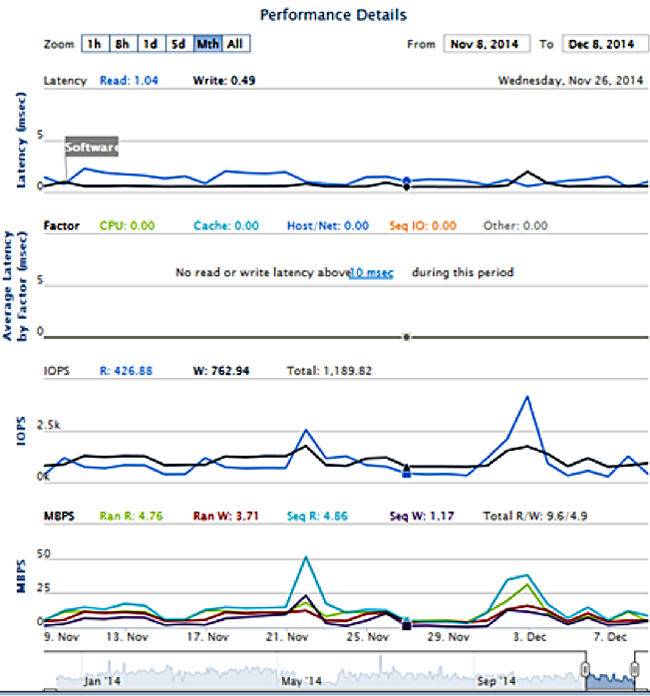

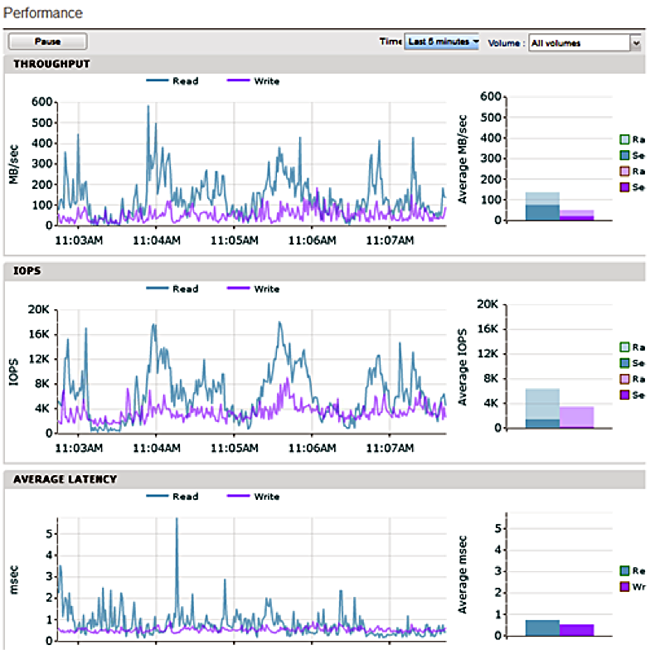

Workload: Currently, our active production VDI runs on one Nimble CS440G-X4 storage array (2.4TB solid-state drive, 12-processor core, 16 to 32TB capacity) and one ES1-H65 expansion shelf (45TB raw). The array is physically connected to Cisco Nexus 5548 switches via 10-gigabit-per-second copper. VMware ESXi/vSphere/Horizon View connectivity is block-level iSCSI. The stack supports about 600 administrative and business users with an average of 225 concurrent sessions. That generates an average workload of about 2,000 to 7,000 IOPS sustained at about 40 to 300 megabits per second throughput on the CS440G-X4. With that workload, our average read/write latency stays close to 1 millisecond, well within acceptable levels for our environment.

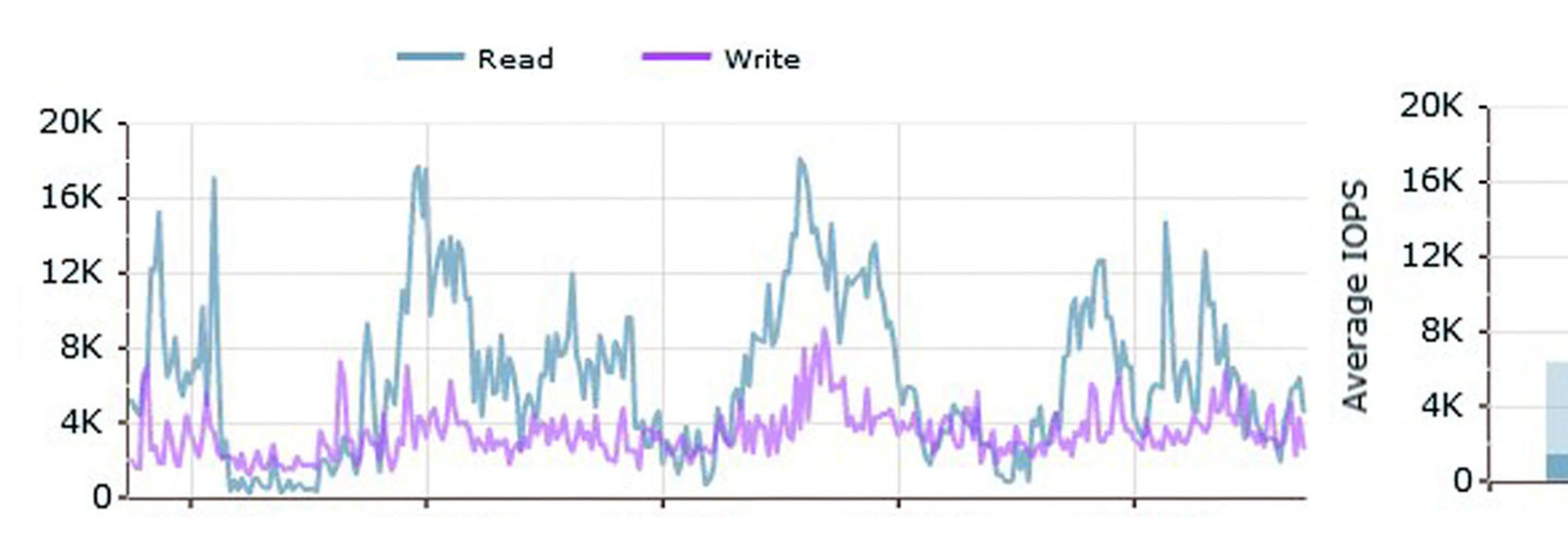

In our environment, boot storms (service degradation that occurs when a significant number of end users boot up within a narrow time frame), concentrated logon times and recompose events can generate shorter, more intense read-heavy storage workloads of about 20,000 to 70,000 IOPS for periods of five to 30 minutes or more; however, our average read/write latency remains very low.
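For readers sizing their own storage, the figures above can be turned into rough per-session numbers. The following is a minimal sketch, not a sizing tool: it uses only the IOPS ranges and the average of 225 concurrent sessions quoted in this article, and it assumes (as a simplification) that the same session count applies during burst events. The function and variable names are hypothetical.

```python
# Figures quoted in the article.
CONCURRENT_SESSIONS = 225          # average concurrent VDI sessions
STEADY_IOPS = (2_000, 7_000)       # sustained workload range
BURST_IOPS = (20_000, 70_000)      # boot storm / logon / recompose range


def per_session(iops_range, sessions):
    """Return the (low, high) IOPS per concurrent session."""
    low, high = iops_range
    return low / sessions, high / sessions


def burst_multiplier(steady, burst):
    """Return burst-to-steady ratios for the low and high ends of the ranges."""
    return burst[0] / steady[0], burst[1] / steady[1]


# Assumption: the average session count is used for both cases, even though
# the number of active sessions during a boot storm may differ.
steady_per_session = per_session(STEADY_IOPS, CONCURRENT_SESSIONS)
burst_per_session = per_session(BURST_IOPS, CONCURRENT_SESSIONS)
multiplier = burst_multiplier(STEADY_IOPS, BURST_IOPS)

print(f"Steady state: {steady_per_session[0]:.1f} to "
      f"{steady_per_session[1]:.1f} IOPS per session")
print(f"Burst events: {burst_per_session[0]:.1f} to "
      f"{burst_per_session[1]:.1f} IOPS per session")
print(f"Burst multiplier: {multiplier[0]:.0f}x to {multiplier[1]:.0f}x")
```

Under those assumptions, the steady-state workload works out to roughly 9 to 31 IOPS per concurrent session, and burst events run at about 10 times the steady-state rate. That multiplier is the headroom a VDI storage tier needs to absorb without a visible rise in latency.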

Speed: Our virtualized data center architecture contains 150 virtual servers and more than 150 databases running on one Nimble CS440G-X4 array. These virtual servers and databases are a mix of Ubuntu Linux, Windows Server 2008/2012, Microsoft SQL Server and MySQL. These generate an average workload of 20,000 to 30,000 IOPS at 200 to 300MBps throughput while the array maintains very low average read/write latencies, normally less than 1 millisecond.

Performance: Unlike other storage solutions we have used in production, the Nimble CS440G-X4 arrays sustain our workloads gracefully, with no negative effect on desktop performance or user experience. To better understand how our storage performs relative to various events in our environment, we obtain analysis and predictive metrics using a combination of port 80/443 access to the storage array management interfaces and port 80/443 access to Nimble InfoSight. For example, we can directly correlate spikes in average read latency to our cache hit rate percentage, IOPS or throughput. We can see cache churn percentage as a percentage of small and large I/O. We also use the predictive analysis in InfoSight for capacity and performance planning. Using the tools and metrics Nimble provides, we are able to tune and optimize our storage performance.
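The kind of correlation described above, between read latency and cache hit rate, can be illustrated with a short calculation. This is a sketch only: the sample values are invented for illustration and are not measurements from the environment described here, and the code assumes the samples have already been exported by hand into two lists. It does not call any Nimble or InfoSight interface.

```python
from math import sqrt


def pearson(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / (spread_x * spread_y)


# Hypothetical samples taken at the same intervals (NOT real measurements).
cache_hit_pct = [99, 98, 97, 90, 85, 96, 99]
read_latency_ms = [0.6, 0.7, 0.8, 1.9, 2.8, 0.9, 0.6]

r = pearson(cache_hit_pct, read_latency_ms)
print(f"Correlation between cache hit rate and read latency: {r:.2f}")
```

A coefficient close to -1 on real data would suggest that latency spikes track drops in cache hit rate, which points toward cache sizing rather than raw disk throughput as the thing to tune.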