In movies, the fastest way to convey a futuristic world is to give characters the power to control their environment with a simple wave of the hand.

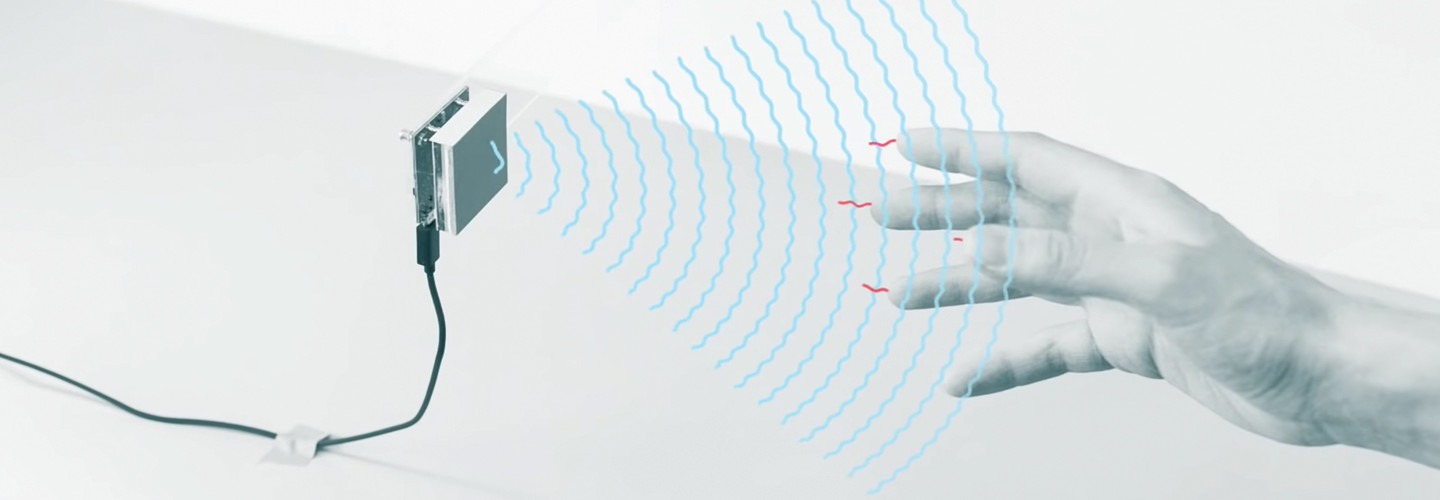

Gesture control isn’t ubiquitous yet, but the technology is progressing. In January, the Federal Communications Commission approved Google’s Project Soli, a sensing technology that uses miniature radar to detect touchless gestures.

“With the FCC approvals, Google can develop the gesture technology at a faster pace,” says Thadhani Jagdish, senior research analyst in the information and communications technology practice at Grand View Research, to eventually “transform an individual’s hand into a universal remote control.”


Microsoft Kinect Puts Content Control in Users’ Hands

Gesture technology is already in use in a range of applications, Jagdish notes. At South Africa’s O.R. Tambo International Airport, a coffee company installed a machine that analyzes travelers’ facial movements and dispenses a cup of coffee if it detects someone yawning.

Samsung launched a TV that supports motion control for changing channels, playing games or using the internet. Leap Motion, which develops hand-tracking software, has an input device that helps a user control a computer or laptop with hand movements.

On campuses, motion-sensing devices can be useful in lecture halls and other spaces that rely on shared screens and collaboration platforms.

10

The maximum distance, in feet, at which the Microsoft Kinect system can detect gestures

Source: MarketWatch, “Gesture Recognition Market Size, Key Players Analysis, Statistics, Emerging Technologies, Regional Trends, Future Prospects and Growth by Forecast 2023,” April 11, 2019

Rich Radke, a professor of electrical, computer and systems engineering at the Rensselaer Polytechnic Institute, says staff there use the Microsoft Kinect, which lets them point at a screen to change the content.

“This kind of technology enables the control of your pointers and your cursors on large displays where it would otherwise be very cumbersome to use your own mouse and keyboard,” he says.

Doug A. Bowman, a professor of computer science and director of the Center for Human-Computer Interaction at Virginia Tech, describes the technology as “still somewhat of a novelty,” but agrees it has big potential, especially in augmented and virtual reality.

“You don’t necessarily want to be holding a specialized control to interact with virtual content,” he says, adding that Microsoft’s HoloLens AR headset can recognize hand gestures to allow users to click buttons, choose menu items and swipe from one screen to the next.

Grand View estimates that the global gesture recognition market will be worth $30.6 billion by 2025, up from $6.22 billion in 2017.

Hand Gesture Tech Will Facilitate Collaboration in Higher Education

In higher education, says Bowman, gesture technology will “increase classroom interaction and allow students to see, learn, understand and interact with the environment, thereby creating an interactive digital world around them.”

2

The number of core gestures recognized by Microsoft’s HoloLens

Source: Microsoft, “Gestures,” Feb. 23, 2019

One challenge, he notes, will be standardizing the gestures that devices recognize, so that they become as consistent as taps and swipes already are across different smartphone models.

“Researchers and designers need to say, ‘What is the minimal set of gestures that will allow us to do most of the things we want to do?’” he says.
